I am a Senior Research Director and Principal Scientist at Xiaomi, where my mission is bringing Artificial Intelligence to the physical world. I lead research in Embodied Intelligence, with a focus on pioneering the next generation of Autonomous Driving and Robotics. My work is at the intersection of Computer Vision, end-to-end learning systems, and Vision-Language-Action (VLA) models, aimed at building intelligent agents that can perceive, reason, and act in complex, real-world environments.

Previously, I was a Staff Scientist at Wayve, where I spearheaded the development of Wayve’s VLA models for the next generation of End-to-End Autonomous Driving. Before that, as a Research Engineer at Lyft Level 5, I led the fleet learning initiative to pretrain the ML planner for Lyft’s self-driving cars using large-scale, crowd-sourced fleet data.

My work Driving-with-LLMs [ICRA 2024, over 250 citations and 500 GitHub stars] was one of the first works to explore Large Language Models (LLMs) for Autonomous Driving; LINGO [ECCV 2024] is the first VLA end-to-end driving model tested on public roads in London; and SimLingo [CVPR 2025] won 1st place at the CVPR 2024 CARLA Autonomous Driving Challenge. My work has been widely featured in news media, including Fortune, Financial Times, MIT Technology Review, Nikkei Robotics, and 36kr.

- Artificial Intelligence

- Computer Vision

- Multi-modal Large Language Models (LLMs)

- Robotics

PhD in Computer Vision / Machine Learning, 2015 - 2018

Bournemouth University, UK

MSc in Medical Image Computing, 2013 - 2014

University College London (UCL), UK

BSc in Biomedical Engineering, 2009 - 2013

Dalian University of Technology (DUT), China

Recent News

- June 2025: Paper SimLingo: Vision-Only Closed-Loop Autonomous Driving with Language-Action Alignment was accepted to CVPR 2025!

- Sep 2024: Keynote talk at ECCV 2024 Workshop: Autonomous Vehicles meet Multimodal Foundation Models.

- Sep 2024: Keynote talk at IEEE ITSC 2024 Workshop: Large Language and Vision Models for Autonomous Driving.

- Sep 2024: Keynote talk at IEEE ITSC 2024 Workshop: Foundation Models for Autonomous Driving.

- July 2024: Paper LingoQA: Video Question Answering for Autonomous Driving was accepted to ECCV 2024!

- June 2024: Keynote talk at CVPR 2024 Workshop: Vision and Language for Autonomous Driving and Robotics.

- June 2024: Organized the CVPR 2024 Tutorial: End-to-End Autonomy: A New Era of Self-Driving in Seattle, US.

- June 2024: CarLLaVA won 1st place in the CARLA Autonomous Driving Challenge!

- May 2024: Presented the ICRA 2024 Paper: Driving-with-LLMs in Yokohama, Japan.

- June 2023: Organized the ICRA 2023 Workshop on Scalable Autonomous Driving in London, UK.

- June 2021: Co-organized the CVPR 2021 Tutorial: Frontiers in Data-driven Autonomous Driving.

- Feb 2021: Granted the US patent Guided Batching, a method for building city-scale HD maps for autonomous driving.

- June 2021: Two papers, Data-driven Planner and SimNet, were accepted to ICRA 2021.

- June 2020: We released the Lyft Level 5 Prediction Dataset.

Experience

AV2.0 - building the next generation of self-driving cars with End-to-End (E2E) Machine Learning and Vision-Language-Action (VLA) models.

[CVPR 2024: End-to-End Tutorial] [ICRA 2023: End-to-End Workshop]

Autonomy 2.0 - Data-Driven Planning models for Lyft’s self-driving vehicles.

[CVPR 2021: Autonomy 2.0 Tutorial] [ICRA 2021: Crowd-sourced Data-Driven Planner]

Featured Publications

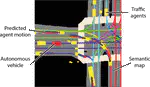

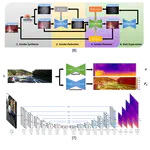

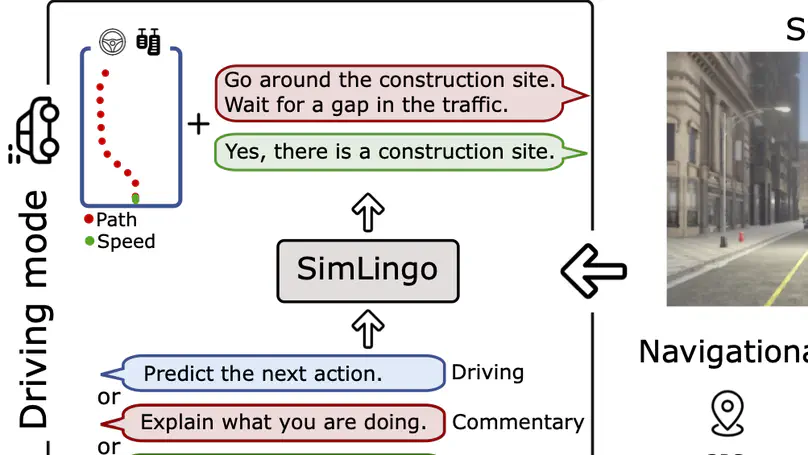

Integrating large language models (LLMs) into autonomous driving has attracted significant attention with the hope of improving generalization and explainability. However, existing methods often focus on either driving or vision-language understanding, and achieving both high driving performance and extensive language understanding remains challenging. In addition, the dominant approach to vision-language understanding is visual question answering. For autonomous driving, however, this is only useful if it is aligned with the action space; otherwise, the model’s answers could be inconsistent with its behavior. Therefore, we propose a model that can handle three different tasks: (1) closed-loop driving, (2) vision-language understanding, and (3) language-action alignment. Our model SimLingo is based on a vision language model (VLM) and uses only cameras, excluding expensive sensors like LiDAR. SimLingo obtains state-of-the-art performance on the widely used CARLA simulator on the Bench2Drive benchmark and is the winning entry at the CARLA challenge 2024. Additionally, we achieve strong results in a wide variety of language-related tasks while maintaining high driving performance.

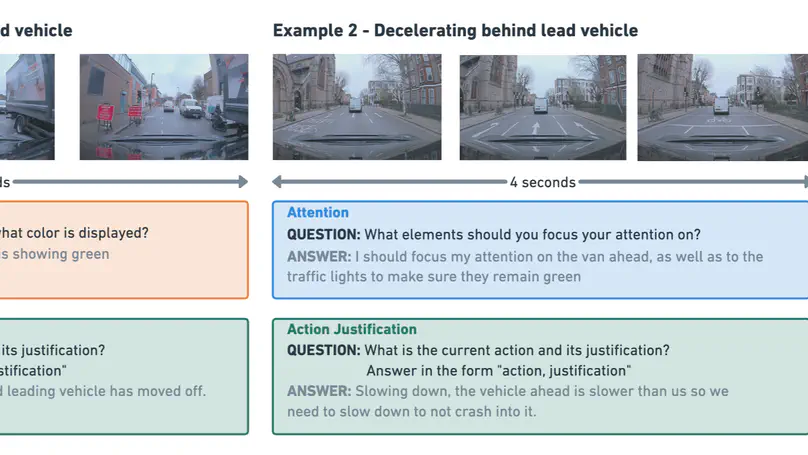

Autonomous driving has long faced a challenge with public acceptance due to the lack of explainability in the decision-making process. Video question-answering (QA) in natural language provides the opportunity for bridging this gap. Nonetheless, evaluating the performance of Video QA models has proved particularly tough due to the absence of comprehensive benchmarks. To fill this gap, we introduce LingoQA, a benchmark specifically for autonomous driving Video QA. The LingoQA trainable metric demonstrates a 0.95 Spearman correlation coefficient with human evaluations. We introduce a Video QA dataset of central London consisting of 419k samples that we release with the paper. We establish a baseline vision-language model and run extensive ablation studies to understand its performance.

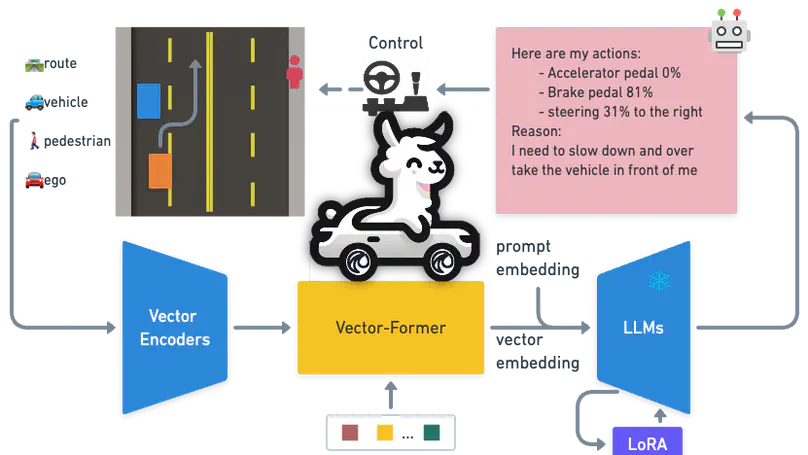

Large Language Models (LLMs) have shown promise in the autonomous driving sector, particularly in generalization and interpretability. We introduce a unique object-level multimodal LLM architecture that merges vectorized numeric modalities with a pre-trained LLM to improve context understanding in driving situations. We also present a new dataset of 160k QA pairs derived from 10k driving scenarios, paired with high-quality control commands collected with an RL agent and question-answer pairs generated by a teacher LLM (GPT-3.5). A distinct pretraining strategy is devised to align numeric vector modalities with static LLM representations using vector captioning language data. We also introduce an evaluation metric for Driving QA and demonstrate our LLM-driver’s proficiency in interpreting driving scenarios, answering questions, and decision-making. Our findings highlight the potential of LLM-based driving action generation in comparison to traditional behavioral cloning. We make our benchmark, datasets, and model available for further exploration.

Mixed reality (MR) is a powerful interactive technology for new types of user experience. We present a semantic-based interactive MR framework that goes beyond current geometry-based approaches, offering a step change in generating high-level context-aware interactions. Our key insight is that by building semantic understanding in MR, we can develop a system that not only greatly enhances user experience through object-specific behaviours, but also paves the way for solving complex interaction design challenges. In this paper, our proposed framework generates semantic properties of the real-world environment through a dense scene reconstruction and deep image understanding scheme. We demonstrate our approach by developing a material-aware prototype system for context-aware physical interactions between real and virtual objects. Quantitative and qualitative evaluation results show that the framework delivers accurate and consistent semantic information in an interactive MR environment, providing effective real-time semantic-level interactions.